When AI Hits a Red Light in Visual Reasoning: Meet ZeroBench

Artificial intelligence (AI) is making leaps in visual reasoning, handling tasks that range from interpreting scientific diagrams to detecting errors in video games. But what happens when you throw visual challenges at it that are built to be impossible for today’s models?

Enter ZeroBench, a new benchmark so brutally difficult that all 20 large multimodal models (LMMs) evaluated scored a whopping 0.0% on its core questions. That’s right: zero percent. If AI had a report card, this would be the equivalent of flunking out spectacularly.

What is ZeroBench?

ZeroBench isn’t just another AI benchmark. It’s a stress test for AI’s visual reasoning capabilities. Unlike conventional benchmarks, which models tend to “solve” within months of release, ZeroBench is designed to be unsolvable for current models (for now), pushing them to their absolute limits.

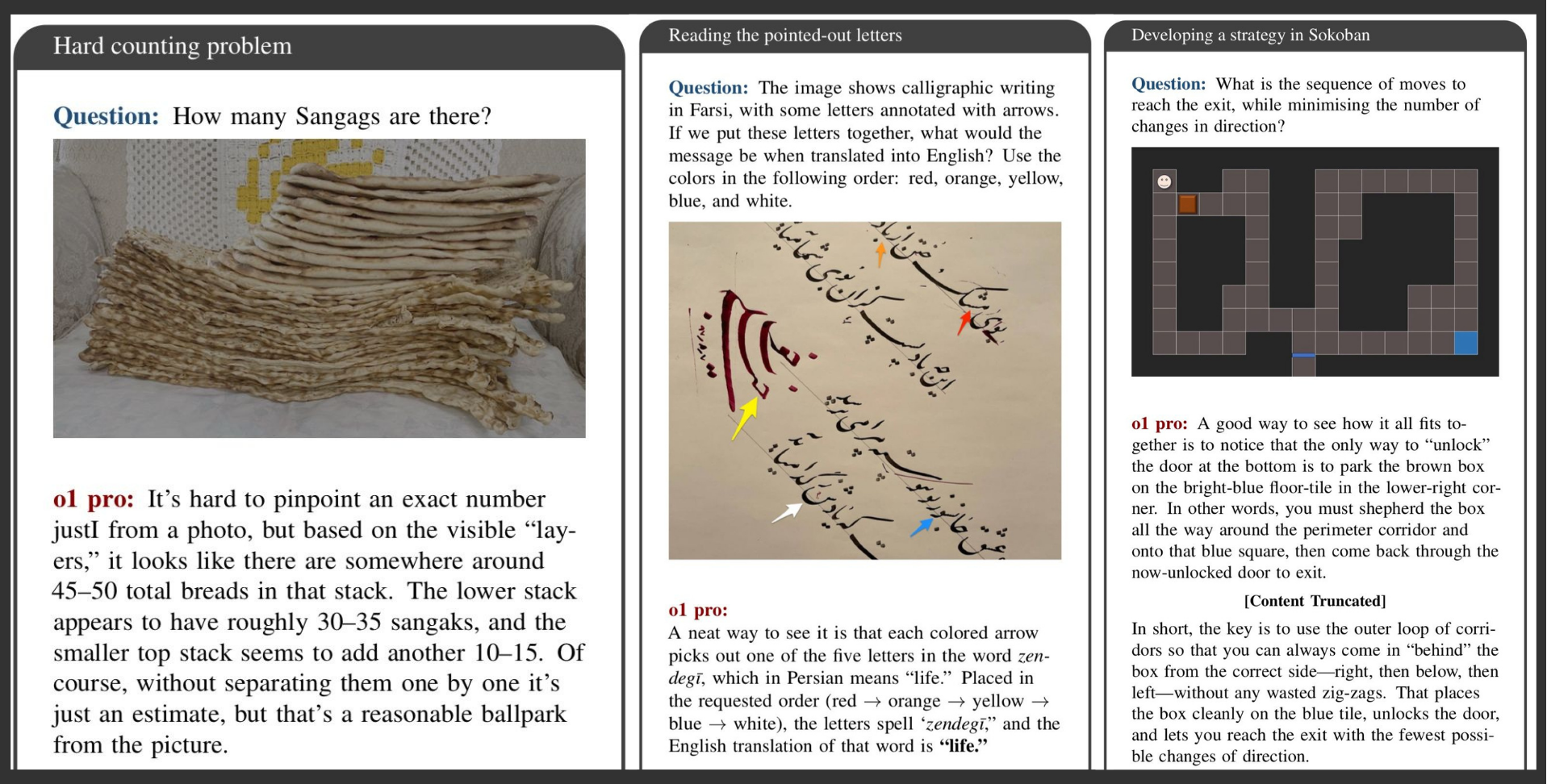

🔹 100 handcrafted, mind-bending visual reasoning tasks

🔹 Designed to exploit LMM weaknesses in spatial reasoning

🔹 Intentionally out of reach for today’s models, not unanswerable in principle

Instead of celebrating incremental AI progress, ZeroBench flips the script—highlighting gaps rather than achievements in AI’s cognitive abilities.
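To make that 0.0% figure concrete, here is a minimal sketch of how an exact-match accuracy score can be computed. The function name, the normalization rule, and the sample answers are all illustrative assumptions; this is not ZeroBench’s actual evaluation code.

```python
def exact_match_accuracy(predictions, references):
    """Return the percentage of predictions that exactly match their reference."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0

    def normalize(answer):
        # Assumed normalization: trim whitespace and ignore case.
        return str(answer).strip().lower()

    correct = sum(
        normalize(p) == normalize(r) for p, r in zip(predictions, references)
    )
    return 100.0 * correct / len(references)


# Made-up answers, for illustration only.
references = ["17", "blue", "4"]
predictions = ["3", "green", "12"]
score = exact_match_accuracy(predictions, references)
print(f"{score:.1f}%")  # prints 0.0%
```

Under scoring like this there is no partial credit: a model that gets most of the reasoning right but the final answer wrong still earns nothing for that question.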

🚶‍♂️ What’s the Connection to Pedestrian Studies?

If AI fails miserably at complex spatial reasoning tasks in a controlled test, how can we expect it to navigate real-world pedestrian environments?

Imagine relying on an AI-powered pedestrian detection system based on today’s best LMMs:

👀 Human: “How many pedestrians are in the crosswalk?”

🤖 AI: “Seventeen… or maybe three. Also, is that a bicycle or a cat?”

The problem? ZeroBench suggests that current LMMs lack the spatial intelligence needed for transportation safety, pedestrian detection, and evacuation planning.

🔹 Misidentifying obstacles and road hazards could lead to dangerous outcomes.

🔹 Misinterpreting pedestrian intent (e.g., crossing vs. waiting) could cause accidents.

🔹 Understanding complex, dynamic environments is critical for AI-driven urban safety applications.

If AI struggles to differentiate objects in a still image, how can it be trusted to navigate fast-moving, real-world scenarios?

What’s Next?

ZeroBench isn’t just a benchmark—it’s a wake-up call for the AI community. Before AI can be deployed in critical, real-world applications, it must dramatically improve its visual-spatial reasoning.

✅ AI must improve before handling pedestrian safety applications.

✅ Visual cognition failures aren’t just funny—they’re dangerous in real-world use.

✅ AI researchers must rethink how models approach spatial reasoning and common sense.

📌 Want to see how your favorite AI models perform?

Check out ZeroBench here: https://zerobench.github.io/

Final Thoughts

🚦 AI is making remarkable progress, but ZeroBench reminds us how far it still has to go. Before we trust AI-powered pedestrian detection or urban safety systems, we need to ensure that AI can see and reason like a human—not just guess.

What do you think? Should AI be trusted in transportation before solving ZeroBench-level challenges?